Evaluation Metric

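- Metric: We use accuracy as the metric. A detection counts as a true positive only if it has at least 75% intersection over union (IoU) with a ground-truth instance. Accuracy is defined as TP / (TP + FP + FN), where TP, FP and FN are the numbers of true positives, false positives and false negatives.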

- Leaderboard Score: The ranking score for the challenge is the average of the accuracy on the two volumes (Total_Accuracy in the leaderboard). In addition, the submission results show each user further evaluation metrics: precision, recall, accuracy and panoptic quality. All of these metrics are reported separately for small, medium and large instances to give a better understanding of the performance.

- Evaluation toolbox: All measurements in the challenge are computed with the TIMISE toolbox.
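To make the scoring concrete, here is a minimal sketch of the metric on toy data. It takes accuracy as TP / (TP + FP + FN) and matches instances at an IoU threshold of 0.75. The function names, the representation of instances as sets of voxel coordinates, and the simple matching loop are assumptions made for illustration; this is not the TIMISE implementation, and the official numbers come from that toolbox.

```python
def iou(a, b):
    """Intersection over union of two sets of voxel coordinates."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def count_matches(pred, gt, threshold=0.75):
    """Return (tp, fp, fn) for instances given as {instance_id: set_of_voxels}.

    Assumes instances within `pred` (and within `gt`) do not overlap. With a
    threshold above 0.5, each ground-truth instance can then match at most one
    prediction, so a simple first-match loop is sufficient.
    """
    matched_gt = set()
    tp = 0
    for p_voxels in pred.values():
        for g_id, g_voxels in gt.items():
            if g_id not in matched_gt and iou(p_voxels, g_voxels) >= threshold:
                matched_gt.add(g_id)
                tp += 1
                break
    fp = len(pred) - tp  # predictions with no matching ground truth
    fn = len(gt) - tp    # ground-truth instances that were missed
    return tp, fp, fn


def accuracy(tp, fp, fn):
    """Accuracy as TP / (TP + FP + FN); 0.0 if there is nothing to score."""
    total = tp + fp + fn
    return tp / total if total else 0.0


def total_accuracy(acc_human, acc_rat):
    """Leaderboard-style score: mean of the accuracy on the two volumes."""
    return (acc_human + acc_rat) / 2


# Toy example: one exact match, one prediction with IoU 0.5, one spurious.
gt = {1: {(0, 0, 0), (0, 0, 1), (0, 0, 2), (0, 0, 3)}, 2: {(5, 5, 5), (5, 5, 6)}}
pred = {10: {(0, 0, 0), (0, 0, 1), (0, 0, 2), (0, 0, 3)}, 11: {(5, 5, 5)}, 12: {(9, 9, 9)}}
tp, fp, fn = count_matches(pred, gt)
print(tp, fp, fn, accuracy(tp, fp, fn))  # 1 2 1 0.25
```

In the toy example, prediction 11 overlaps ground-truth instance 2 with an IoU of only 0.5, so it counts as a false positive and instance 2 as a false negative.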

Submission Format

The challenge accepts HDF5 files for submission. A valid submission consists of two separate HDF5 files, each containing the mitochondria instance segmentation result for the test split (slices 500-999) of one volume.

Please name your files as follows:

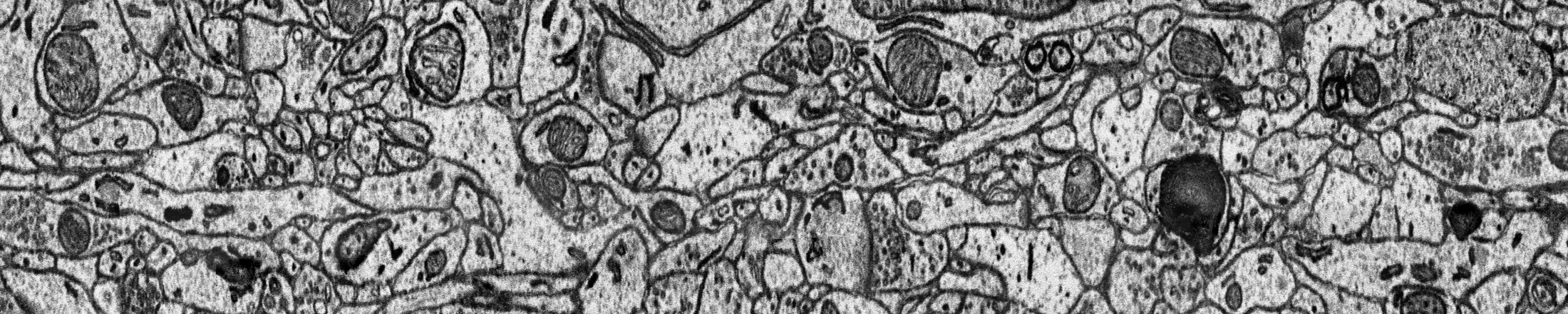

MUST: instance segmentation result for both volumes (500x4096x4096 each):

- 0_human_instance_seg_pred.h5: H5 file containing the instance segmentation results on the Human volume

- 1_rat_instance_seg_pred.h5: H5 file containing the instance segmentation results on the Rat volume

Note: since only one file can be submitted, zip all .h5 files into a single .zip file.
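As an illustration of the packaging step, the sketch below bundles the two prediction files into one archive using only the Python standard library. The helper name `zip_submission` and the archive name `submission.zip` are hypothetical choices, not something the challenge prescribes; only the two .h5 file names come from the list above.

```python
import zipfile
from pathlib import Path

# File names required by the challenge (see the list above).
EXPECTED_FILES = ("0_human_instance_seg_pred.h5", "1_rat_instance_seg_pred.h5")


def zip_submission(directory, zip_name="submission.zip"):
    """Zip both prediction files found in `directory` into a single archive.

    `zip_name` is a hypothetical default; pick whatever name you prefer.
    Raises FileNotFoundError if either expected file is missing.
    """
    directory = Path(directory)
    missing = [name for name in EXPECTED_FILES if not (directory / name).is_file()]
    if missing:
        raise FileNotFoundError(f"missing prediction files: {missing}")

    zip_path = directory / zip_name
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name in EXPECTED_FILES:
            # Store each file at the archive root under its required name.
            zf.write(directory / name, arcname=name)
    return zip_path
```

Calling `zip_submission("predictions")` on a folder that holds both files returns the path of the new archive. Checking for missing files before writing avoids producing an incomplete archive.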